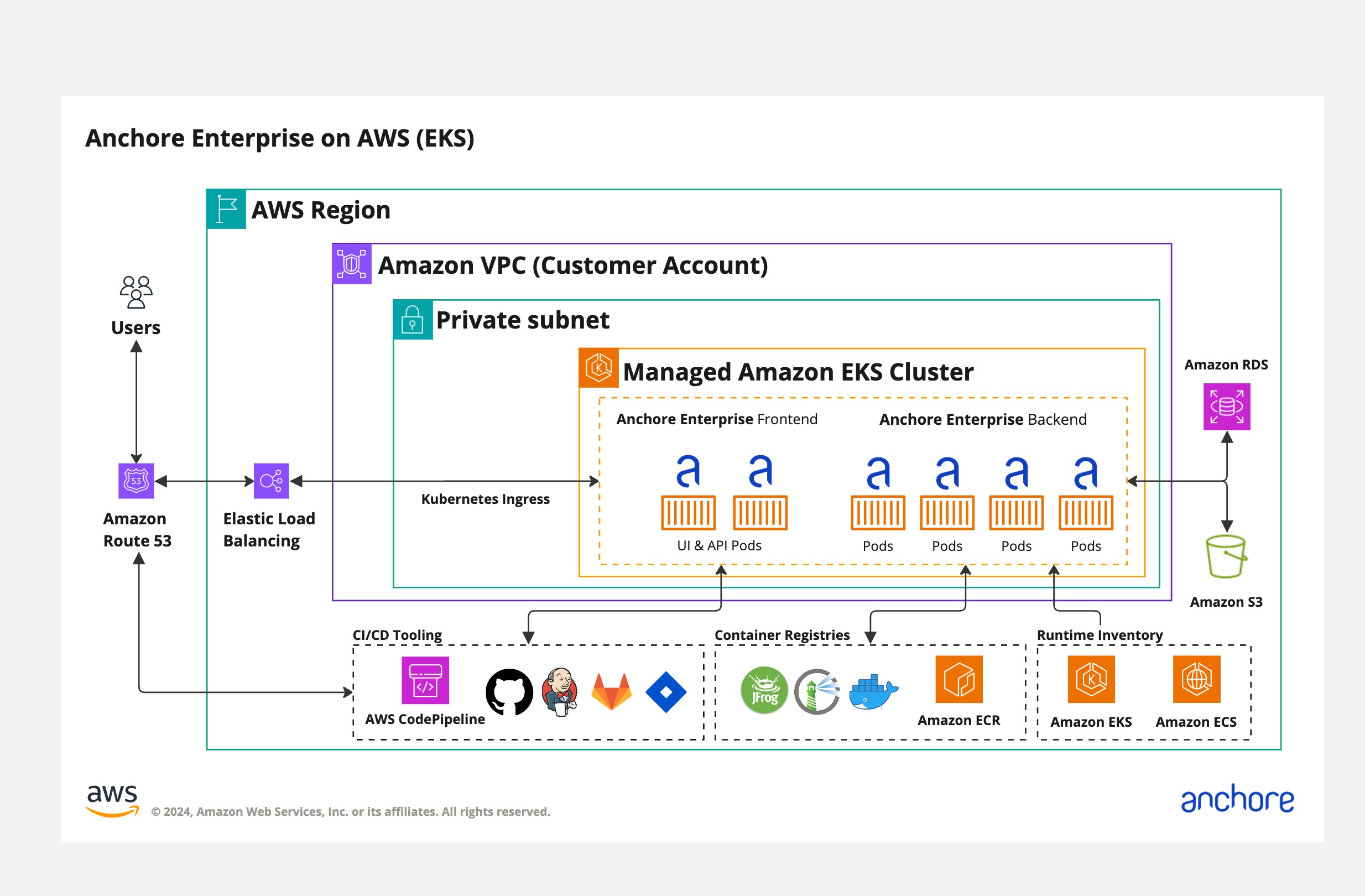

This section provides information on how to deploy Anchore Enterprise onto Amazon EKS. Here is recommended architecture on AWS EKS:

Prerequisites

You’ll need a running Amazon EKS cluster with worker nodes. See EKS Documentation for more information on this setup.

Once you have an EKS cluster up and running with worker nodes launched, you can verify it using the following command:

```
$ kubectl get nodes
NAME                             STATUS   ROLES    AGE   VERSION
ip-192-168-2-164.ec2.internal    Ready    <none>   10m   v1.14.6-eks-5047ed
ip-192-168-35-43.ec2.internal    Ready    <none>   10m   v1.14.6-eks-5047ed
ip-192-168-55-228.ec2.internal   Ready    <none>   10m   v1.14.6-eks-5047ed
```
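On larger clusters, reading the STATUS column by eye gets tedious. The following is a minimal sketch that counts nodes not reporting `Ready` from `kubectl get nodes --no-headers` output. The function name is hypothetical. It treats any status other than exactly `Ready` as not ready, so cordoned nodes (`Ready,SchedulingDisabled`) are counted too.

```shell
# Count nodes whose STATUS column (field 2) is anything other than "Ready".
# Expects `kubectl get nodes --no-headers` output on stdin.
count_not_ready_nodes() {
  awk '$2 != "Ready" { n++ } END { print n + 0 }'
}

# Usage (requires access to the cluster):
#   kubectl get nodes --no-headers | count_not_ready_nodes
```

A result of `0` means every worker node is ready to receive the Anchore pods.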

To deploy the Anchore Enterprise services, you also need the Helm client installed on your local host.
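Before you continue, you can confirm that both client tools are on your `PATH`. This is a small sketch; `require_cmds` is a hypothetical helper name. It checks `kubectl` and `helm`, since this guide uses both.

```shell
# Return non-zero (and name each missing tool) if any command is not on PATH.
require_cmds() {
  missing=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing required command: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
#   require_cmds kubectl helm && helm version --short
```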

Deployment via Helm Chart

Anchore maintains a Helm chart to simplify the software deployment process.

To configure the Helm chart, create a custom anchore_values.yaml file and reference it during deployment. Anchore has many configuration options. The following covers the changes recommended for successfully deploying Anchore Enterprise on Amazon EKS.
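To reference the values file at deploy time, you pass it with `-f`. The sketch below rests on several assumptions that you should check against the install instructions. It assumes the Anchore chart repository at `https://charts.anchore.io` and the chart name `anchore/enterprise`. The release name `anchore` and the function name are hypothetical. Other prerequisites covered in the install instructions (for example, the Enterprise license) are not handled here.

```shell
# Hypothetical wrapper: install or upgrade Anchore Enterprise with a custom values file.
# Assumed chart location: repo https://charts.anchore.io, chart anchore/enterprise.
install_anchore() {
  values_file=$1
  namespace=${2:-default}
  # Refuse to run helm at all if the values file is missing.
  if [ ! -f "$values_file" ]; then
    echo "values file not found: $values_file" >&2
    return 1
  fi
  helm repo add anchore https://charts.anchore.io
  helm repo update >/dev/null
  # "upgrade --install" installs on the first run and upgrades on later runs.
  helm upgrade --install anchore anchore/enterprise \
    --namespace "$namespace" --create-namespace \
    -f "$values_file"
}

# Usage:
#   install_anchore ./anchore_values.yaml anchore
```

Because `helm upgrade --install` is idempotent, you can re-run it after each change to `anchore_values.yaml`.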

Configurations

The following configurations should be used when deploying on EKS.

RDS

Anchore recommends using Amazon RDS as a managed database service rather than the PostgreSQL instance managed by the Anchore chart. For information on how to configure an external RDS database, see Amazon RDS. We suggest enabling RDS storage autoscaling so that storage automatically increases as needed.
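RDS storage autoscaling is turned on by setting a maximum storage threshold on the instance. The following is a sketch using the AWS CLI's `aws rds modify-db-instance` with `--max-allocated-storage`. The function name and the instance identifier `anchore-db` in the usage line are hypothetical; substitute your own.

```shell
# Enable RDS storage autoscaling by setting a maximum storage threshold in GiB.
enable_rds_storage_autoscaling() {
  db_id=$1
  max_gib=$2
  if [ -z "$db_id" ]; then
    echo "usage: enable_rds_storage_autoscaling <db-instance-id> <max-gib>" >&2
    return 2
  fi
  # Reject anything that is not a whole number before calling AWS.
  case "$max_gib" in
    ''|*[!0-9]*)
      echo "max storage must be a whole number of GiB: '$max_gib'" >&2
      return 2
      ;;
  esac
  aws rds modify-db-instance \
    --db-instance-identifier "$db_id" \
    --max-allocated-storage "$max_gib" \
    --apply-immediately
}

# Usage (hypothetical identifier):
#   enable_rds_storage_autoscaling anchore-db 500
```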

S3 Object Storage

Anchore supports S3 object storage for archiving SBOMs; configuration details can be found here. Consider using the iamauto: True option to use IAM roles for access to S3.
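One common way to give pods an IAM role on EKS is IAM roles for service accounts (IRSA). With IRSA, you annotate a Kubernetes service account with `eks.amazonaws.com/role-arn`. The sketch below assumes several things: that `iamauto: True` lets Anchore pick up these role credentials, that your cluster has an IAM OIDC provider, and that the role's trust policy allows the service account. The service account name, namespace, and role ARN in the usage line are hypothetical. Pods pick up the role only after they are restarted.

```shell
# Annotate a service account so pods using it assume the given IAM role (IRSA).
annotate_irsa_role() {
  namespace=$1
  service_account=$2
  role_arn=$3
  # Basic sanity check for the standard "aws" partition role ARN format.
  case "$role_arn" in
    arn:aws:iam::*:role/*) ;;
    *)
      echo "not an IAM role ARN: $role_arn" >&2
      return 2
      ;;
  esac
  kubectl annotate serviceaccount "$service_account" \
    --namespace "$namespace" \
    "eks.amazonaws.com/role-arn=$role_arn" \
    --overwrite
}

# Usage (hypothetical names):
#   annotate_irsa_role anchore anchore-sa arn:aws:iam::111122223333:role/anchore-s3
```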

PVCs

By default, Anchore uses ephemeral storage for pods, but we recommend configuring a volume for the analyzer scratch space at a minimum. Further details can be found here.

Anchore generally recommends gp3 EBS-backed storage for the analyzer scratch space. To use it, first follow the AWS guide on storing Kubernetes volumes with Amazon EBS. Once the EBS CSI driver is configured for your cluster, configure your Helm chart with values similar to the following:

```yaml
analyzer:
  scratchVolume:
    details:
      ephemeral:
        volumeClaimTemplate:
          metadata: {}
          spec:
            accessModes:
              - ReadWriteOnce
            resources:
              requests:
                # must be 3xANCHORE_MAX_COMPRESSED_IMAGE_SIZE_MB + analyser_cache_size
                # Setting this to 100G would mean the largest image you can scan is 30G (not counting analysis cache if you choose to configure that)
                storage: 100Gi
            # this would refer to whatever your storage class was named
            storageClassName: "gp3"
```
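The scratch-volume comment above encodes a simple sizing rule: scratch space is at least three times the largest compressed image, plus any analyzer cache. The rule is linear, so it works in any unit as long as you use one consistently. The sketch below applies it in whole GiB with integer shell arithmetic, which truncates. It also prints a StorageClass named `gp3` to match `storageClassName: "gp3"`. That manifest assumes the Amazon EBS CSI driver (provisioner `ebs.csi.aws.com`); if you use EKS Auto Mode, check its documentation for the provisioner name. All function names are hypothetical.

```shell
# Sizing rule from the values comment:
#   scratch >= 3 x max compressed image size + analyzer cache size
# All arguments and results are whole GiB.
scratch_gib_needed() {
  # $1 = largest compressed image to scan, $2 = analyzer cache size (default 0)
  echo $(( 3 * $1 + ${2:-0} ))
}

max_image_gib_for_scratch() {
  # $1 = scratch volume size, $2 = analyzer cache size (default 0)
  echo $(( ($1 - ${2:-0}) / 3 ))
}

# StorageClass named "gp3" backed by the Amazon EBS CSI driver.
gp3_storageclass_manifest() {
  cat <<'EOF'
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: gp3
provisioner: ebs.csi.aws.com
volumeBindingMode: WaitForFirstConsumer
parameters:
  type: gp3
EOF
}

# Usage:
#   scratch_gib_needed 30           # prints 90
#   max_image_gib_for_scratch 100   # prints 33
#   gp3_storageclass_manifest | kubectl apply -f -
```

With the 100Gi request above and no cache, the formula allows images of up to 33 GiB. The comment's 30G figure leaves some headroom below that limit.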

Ingress

Anchore recommends using the AWS Load Balancer Controller (LBC) or EKS Auto Mode (https://docs.aws.amazon.com/eks/latest/userguide/auto-configure-alb.html) for ingress.

We also suggest serving Anchore from a vanity domain (anchore.mydomain.com in the example below) over TLS, using Route 53 and ACM; however, this is beyond the scope of this document.

Here are sample Helm values for use with the AWS LBC or the EKS Auto Mode ALB ingress:

```yaml
ingress:
  enabled: true
  apiPaths:
    - /v2/
    - /version/
  uiPath: /
  ingressClassName: alb
  annotations:
    # See https://github.com/kubernetes-sigs/aws-load-balancer-controller/blob/main/docs/guide/ingress/annotations.md for further customization of annotations
    alb.ingress.kubernetes.io/scheme: internet-facing
  # If you do not plan to bring your own hostname (i.e. use the AWS supplied CNAME for the load balancer) then you can leave apiHosts & uiHosts as empty lists:
  #apiHosts: []
  #uiHosts: []
  # If you plan to bring your own hostname then you'll likely want to populate them as follows:
  apiHosts:
    - anchore.mydomain.com
  uiHosts:
    - anchore.mydomain.com
```
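`ingressClassName: alb` only works if an IngressClass with that name exists in the cluster. As far as we know, the AWS LBC Helm chart creates one named `alb` by default. With EKS Auto Mode, you typically create the IngressClass yourself, as described in the linked AWS guide. Here is a small sketch to check for it before you deploy; the function name is hypothetical.

```shell
# Succeed only if an IngressClass with the given name exists.
ingressclass_exists() {
  kubectl get ingressclass "$1" >/dev/null 2>&1
}

# Usage:
#   ingressclass_exists alb || echo "no IngressClass named alb found" >&2
```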

Note

There are alternative ways to access services within your EKS cluster besides LBC ingress. For the ALB ingress, you must also change the following services from ClusterIP to NodePort (the LBC's default instance target type routes traffic to node ports):

For the Anchore API Service:

```yaml
# Pod configuration for the anchore engine api service.
api:
  # kubernetes service configuration for anchore external API
  service:
    type: NodePort
    port: 8228
    annotations: {}
```

For the Anchore Enterprise UI Service:

```yaml
ui:
  # kubernetes service configuration for anchore UI
  service:
    type: NodePort
    port: 80
    annotations: {}
    sessionAffinity: ClientIP
```
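Once a service is of type NodePort, Kubernetes assigns it a port from the node port range (30000-32767 by default). The sketch below looks that port up with a JSONPath query. The default namespace and the function name are assumptions. The service names in the usage lines are the ones that appear in the ingress output later in this guide.

```shell
# Print the nodePort assigned to the first port of a Service.
service_node_port() {
  kubectl get service "$1" --namespace "${2:-default}" \
    -o jsonpath='{.spec.ports[0].nodePort}'
}

# Usage:
#   service_node_port anchore-enterprise-api
#   service_node_port anchore-enterprise-ui
```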

For users of Amazon ALB:

ALB users may want to align the keep-alive timeout of Anchore's gunicorn server with the ALB idle timeout. The AWS ALB connection idle timeout defaults to 60 seconds, while the corresponding timeout in the Anchore Helm chart defaults to 5 seconds. Set the Anchore timeout slightly longer than the ALB's; if the timeouts are not aligned, the ALB may sporadically return HTTP 502 errors. Please see this reference.

Note

Change timeout_keep_alive from 5 to 65 to align with the ALB’s default idle timeout of 60 seconds:

```yaml
anchoreConfig:
  server:
    timeout_keep_alive: 65
```
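The rule behind this setting is that the server's keep-alive must exceed the ALB idle timeout. That way the ALB, not the backend, closes idle connections. The sketch below checks the rule. It can also read the idle timeout actually configured on a load balancer through the `idle_timeout.timeout_seconds` attribute, which is useful if you have changed it (for example, with the `alb.ingress.kubernetes.io/load-balancer-attributes` annotation). The function names and `$ALB_ARN` are hypothetical.

```shell
# Succeed if timeout_keep_alive ($1) is strictly longer than the ALB idle timeout ($2, default 60).
keepalive_is_aligned() {
  [ "$1" -gt "${2:-60}" ]
}

# Print the idle timeout (seconds) configured on a load balancer.
alb_idle_timeout() {
  aws elbv2 describe-load-balancer-attributes \
    --load-balancer-arn "$1" \
    --query "Attributes[?Key=='idle_timeout.timeout_seconds'].Value" \
    --output text
}

# Usage:
#   keepalive_is_aligned 65 "$(alb_idle_timeout "$ALB_ARN")" && echo "timeouts aligned"
```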

Install Anchore Enterprise

Deploy Anchore Enterprise by following the instructions here.
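After installing, you can block until the pods are ready instead of polling by hand. This sketch uses `kubectl wait` on all pods in a namespace; the namespace and timeout defaults are assumptions. Pods from completed Jobs never report Ready. If the namespace contains any, narrow the selection with a label selector (`-l`) rather than `--all`.

```shell
# Wait until every pod in the namespace reports Ready, or fail after the timeout.
wait_for_anchore_pods() {
  kubectl wait pod --all \
    --for=condition=Ready \
    --namespace "${1:-default}" \
    --timeout="${2:-10m}"
}

# Usage:
#   wait_for_anchore_pods default 15m && kubectl get pods --namespace default
```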

Verify Ingress

Run the following command to see details of the deployed ingress resource and its ELB:

```
$ kubectl describe ingress
Name:             anchore-enterprise
Namespace:        default
Address:          xxxxxxx-default-anchoreen-xxxx-xxxxxxxxx.us-east-1.elb.amazonaws.com
Default backend:  default-http-backend:80 (<none>)
Rules:
  Host  Path  Backends
  ----  ----  --------
  *
        /v2/*   anchore-enterprise-api:8228 (192.168.42.122:8228)
        /*      anchore-enterprise-ui:80 (192.168.14.212:3000)
Annotations:
  alb.ingress.kubernetes.io/scheme:  internet-facing
  kubernetes.io/ingress.class:       alb
Events:
  Type    Reason  Age  From                    Message
  ----    ------  ---- ----                    -------
  Normal  CREATE  14m  alb-ingress-controller  LoadBalancer 904f0f3b-default-anchoreen-d4c9 created, ARN: arn:aws:elasticloadbalancing:us-east-1:077257324153:loadbalancer/app/904f0f3b-default-anchoreen-d4c9/4b0e9de48f13daac
  Normal  CREATE  14m  alb-ingress-controller  rule 1 created with conditions [{ Field: "path-pattern", Values: ["/v2/*"] }]
  Normal  CREATE  14m  alb-ingress-controller  rule 2 created with conditions [{ Field: "path-pattern", Values: ["/*"] }]
```

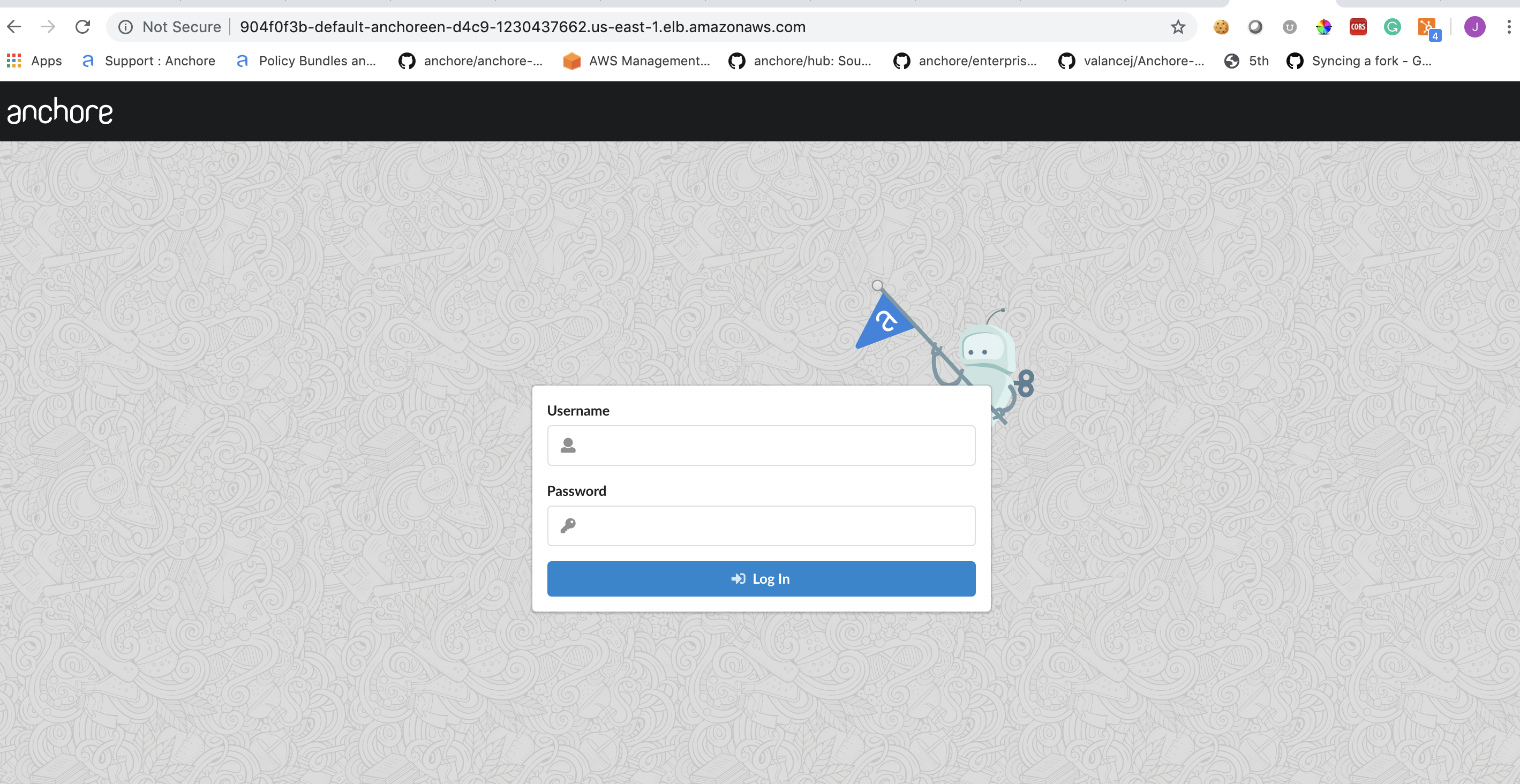

The output above shows that an ELB has been created. Next, try navigating to the specified URL in a browser:
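To use the load balancer address in scripts rather than copying it from the `Address:` line, you can read it from the ingress status with JSONPath. A minimal sketch follows; the function name is hypothetical, and the ingress name and namespace come from the output above. The field stays empty until the load balancer has been provisioned.

```shell
# Print the DNS name published in an Ingress's load balancer status.
ingress_hostname() {
  kubectl get ingress "$1" --namespace "${2:-default}" \
    -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'
}

# Usage:
#   echo "http://$(ingress_hostname anchore-enterprise default)/"
```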

Verify Anchore Service Status

Check the status of the system with AnchoreCTL to verify all of the Anchore services are up:

Note: Read more on Deploying AnchoreCTL

```shell
ANCHORECTL_URL=http://xxxxxx-default-anchoreen-xxxx-xxxxxxxxxx.us-east-1.elb.amazonaws.com \
ANCHORECTL_USERNAME=admin \
ANCHORECTL_PASSWORD=foobar \
anchorectl system status
```
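Typing the password inline, as in the command above, leaves it in your shell history. The following sketch instead reads the same three `ANCHORECTL_*` variables from the environment and refuses to run if any is unset. The wrapper name is hypothetical, and the password prompt in the usage lines uses POSIX `stty -echo`.

```shell
# Run `anchorectl system status` with credentials taken from the environment.
anchore_system_status() {
  if [ -z "${ANCHORECTL_URL:-}" ] || [ -z "${ANCHORECTL_USERNAME:-}" ] || [ -z "${ANCHORECTL_PASSWORD:-}" ]; then
    echo "set ANCHORECTL_URL, ANCHORECTL_USERNAME and ANCHORECTL_PASSWORD first" >&2
    return 1
  fi
  # Export so the anchorectl child process can see the values.
  export ANCHORECTL_URL ANCHORECTL_USERNAME ANCHORECTL_PASSWORD
  anchorectl system status
}

# Usage:
#   ANCHORECTL_URL="http://<your-elb-hostname>"
#   ANCHORECTL_USERNAME=admin
#   printf 'Password: '; stty -echo; read -r ANCHORECTL_PASSWORD; stty echo; echo
#   anchore_system_status
```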